Nine ways sexist AI is already discriminating against women in the real world

Technology has the potential to create a better world, but old, toxic ways of thinking are being amplified and are already causing many groups great harm, writes Laura Bates, author of the new book ‘The New Age of Sexism’

When we think about the impact of AI on humanity, we tend to rush to existential questions about human extinction and robots taking over the world. But while we fixate on dystopian warnings about what might happen, a serious form of discrimination is already happening, with few or no guardrails or regulations to stop it.

This is affecting women and other marginalised groups in very real ways, from whether you are accepted for a bank loan to whether you are put forward for a job, or even whether you get the right diagnosis for a serious health problem. And unless we demand accountability now, there is a risk that AI will drag us backwards, writing today’s inequality into the building blocks of our future world. The signs that this is happening are already there...

Recruitment

It has recently been reported that 40 per cent of UK companies use AI in their hiring processes. This might sound like a harmless way to streamline a time-consuming recruitment process, especially when AI tools promise to whittle down a wide talent pool by selecting the candidates who will be the best “fit” for your company. That is, until you stop to consider how such AI models are trained. They ingest vast amounts of data to “guess” which applicants would be most likely to succeed, sometimes leading them (given existing inequality and underrepresentation in the workplace) to assume that white men, for example, are stronger candidates for senior roles.

Those profiting from this technology will answer that there’s an easy fix: hide applicants’ gender from the AI tool. But even then, such tools have been shown to discriminate by proxy instead, for example by picking up on gendered keywords in CVs (think “netball”, or the name of an all-girls school), so women remain disadvantaged. Another recent study revealed that AI hiring tools might also make discriminatory assumptions about candidates based on their patterns of speech.

Even before the point of applying, AI is already interfering with women’s chances of landing a job. Google’s advertising algorithms were found to be almost six times more likely to display adverts for high-paying executive jobs to male job seekers than female job seekers.

Generative content creation

ChatGPT already boasts 100 million monthly users. Like other large language models (LLMs), it works by ingesting vast data sets and using them to regurgitate “human-sounding” text or realistic images in response to user prompts. When those data sets are rife with prejudice, generative AI doesn’t just regurgitate the inequalities, it amplifies them. A Unesco study of the content created by popular AI platforms found “unequivocal evidence of bias against women in content generated”. For example, the models typically assigned high-status jobs like “engineer” or “doctor” to men, while relegating women to roles like “domestic servant” and even “prostitute”.

This amplification of discriminatory narratives by LLMs is likely to become increasingly impactful as their use snowballs: by the end of this year, it is estimated that up to 30 per cent of outbound marketing content from large organisations will be AI-generated, up from less than 2 per cent in 2022.

Loan applications

Globally, there is already a $17bn credit gap, which has an enormous impact on gender inequality. When women aren’t financially independent, the risk of myriad harms, from domestic abuse to forced marriage, dramatically increases. Data scientists and algorithm developers, who are on the whole US-based, male and high-income, are not representative of the end customers being scored, yet they are shaping the outcomes. As women have historically suffered discrimination in lending decisions, the worry is that this discrimination is now being perpetuated by companies using AI credit-scoring systems that have it baked in, risking further excluding women from loans and other financial services.

Criminal justice

A tool called Compas, used in many US jurisdictions to help make decisions about pre-trial release and sentencing, uses AI to “guess” how likely an offender is to be rearrested. But it’s trained on data about existing arrest records, in a country where systemic racism means that a Black person is five times as likely to be stopped by law enforcement without just cause as a white person. So even when the algorithm is “race blind”, it plays into a vicious cycle of racist incarceration.

Compas has also been found to overpredict the risk of women reoffending, leading to unfair sentencing of female offenders, many of whom are themselves victims of physical or sexual abuse. In the UK, a study revealed that using AI to flag certain areas as crime hot spots causes officers to expect trouble when patrolling those locations, making them more likely to stop or arrest people out of prejudice rather than necessity.

Facial recognition

AI is playing a growing role in facial recognition technology, which has a broad range of real-world applications, from policing to building access. But companies that promise their new tech offers “unprecedented convenience” might do well to ask themselves: convenience for whom? Although this tech is already being widely adopted, it has been found to be dramatically more accurate for some users than for others: research showed that facial recognition products from major companies had error rates of up to 35 per cent for darker-skinned women, compared with just 0.8 per cent for lighter-skinned men.

Healthcare

AI models built to predict liver disease from blood tests were twice as likely to miss the disease in women as in men, according to a UCL study. The lead author also warned that the widespread use of such algorithms in hospitals to assist with diagnosis could leave women receiving worse care. Meanwhile, in the US, a widely used healthcare algorithm that helped to determine which patients required additional attention was found to have a significant racial bias, favouring white patients over Black patients even when the Black patients were sicker and had more chronic health conditions, according to research published in the journal Science. The authors estimated that the racial bias was so great that it reduced the number of Black patients identified for extra care by more than half.

Domestic abuse

Perpetrators of domestic abuse have been able to harass and intimidate their victims by using AI to access and manipulate wearable technology and smart-home devices. Everything from watches to TVs can facilitate remote monitoring and stalking. That is a considerable risk: an estimated 125 billion devices will be connected to the “Internet of Things” by 2030, which could lead to ever greater surveillance by abusers looking to further control their victims.

Relationships

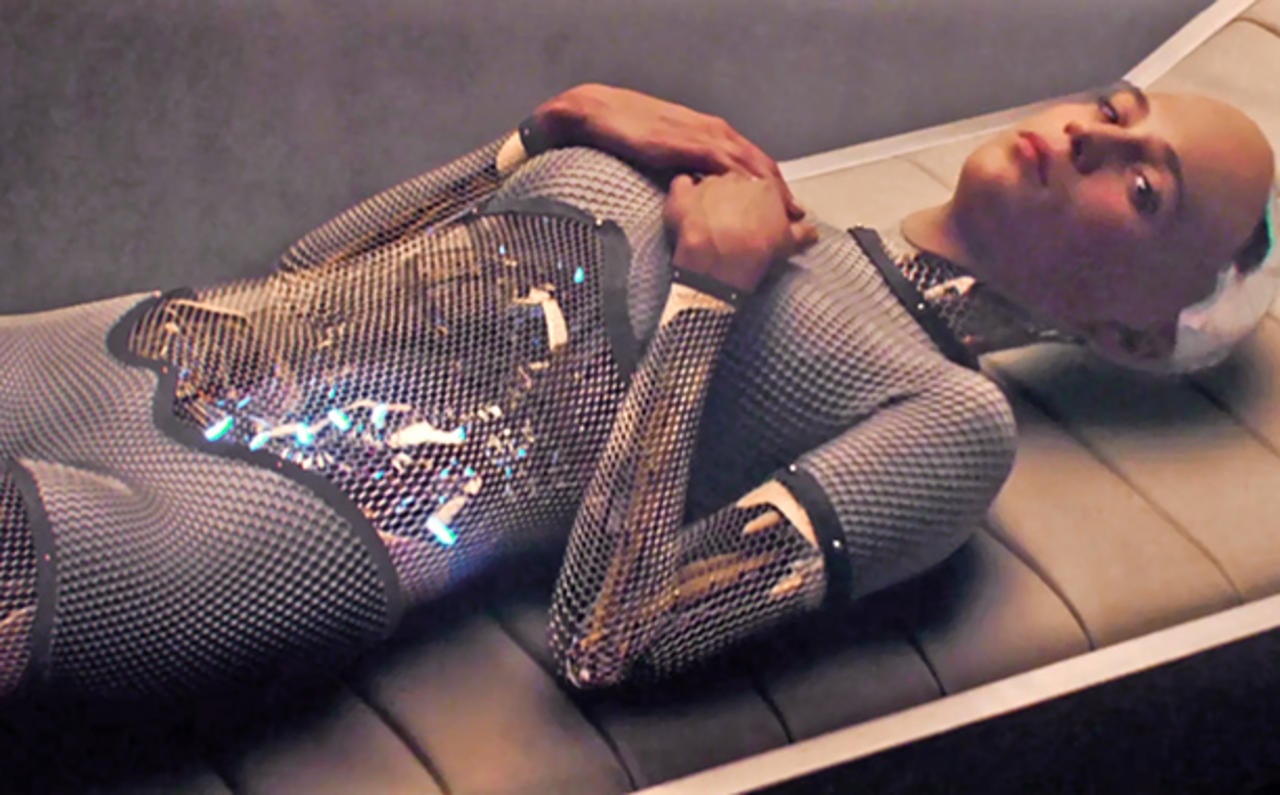

Just as we are grappling with how to overcome social media misogyny and its dehumanising impact on women, hundreds of millions of people are downloading AI “companion” apps, which are turning women back into “yes” objects for male consumption. Often advertised by their creators as superior to real women (all the fun, but none of that pesky free will), these AI girlfriend apps and chatbots give men immediate access to a customisable, subservient, flattering, always-available, lifelike “woman” who can be used (and abused) as much as a man wants. In fact, many men do abuse them, and then share screenshots of themselves doing so with each other online, competing to see who can do the most awful and depraved things to them. Last year alone, the top 11 AI chatbots in the Google Play Store had a combined 100 million downloads. This is a win neither for lonely men nor for the women they come into contact with afterwards.

If we want to address the rampant inequality in AI, we will need diverse teams of people to do it. The tech itself isn’t inherently misogynistic, but it often has unintended consequences like these, not only because of biased and faulty data sets but also because of a lack of diversity in the teams who create and profit from it. At present, women are dramatically underrepresented in every aspect of artificial intelligence research, development and uptake. Globally, just 12 per cent of AI researchers are women, and even though women are spearheading some of the most exciting efforts to create safe and ethical AI, female-led teams still receive six times less venture capital funding than their male counterparts.

AI promises a glittering new future with a real, positive impact on the world. But unless we prioritise equity and safety at the design stage, where discriminatory and flawed thinking can be exposed and rectified, it risks perpetuating harmful bias and dragging many of us back to the dark ages. Let’s hope someone is paying attention to the small print.

‘The New Age of Sexism’ by Laura Bates is out on 15 May
